How to Think About Evolving a System

One passage from Clean Code that has stayed with me over the years discusses the challenges of large-scale refactoring. When a company embarks on this process, it often creates a separate team (the book calls it the tiger team) to build the new code or infrastructure. Meanwhile, the old infrastructure keeps gaining features and evolving to meet company roadmaps and customer needs, so the tiger team is in a perpetual state of catch-up. In many cases the tiger team builds for years and never manages to migrate to the new infrastructure, or corners are cut to get it done sooner. This is why I never opt for full rebuilds and instead make step-by-step, iterative modifications to the existing code base. This work is largely driven by feature requests. For example, a team might spend an extra 10% on a feature to rebuild the parts of the code base that the feature touches, moving them toward the desired future implementation. This is not easy. It takes a lot of experience and planning to do effectively, and it takes veterans who have done it over and over again to execute properly.

No two infrastructures are exactly the same, and not everyone's challenges are the same, so this article is by no means a definitive how-to guide. It can, however, be viewed as a way of thinking about the process that can be incorporated into any refactor. So, if you are starting with a monolith, how do you effectively transition to microservices? Below I'll walk through how, with good planning, you can make that transition efficiently. This discussion omits peripheral scaffolding such as event systems, testing infrastructure, transaction management, and role-based access control. To avoid overcomplicating things, it focuses only on moving business logic from a monolith to microservices.

Monolith

If you built a web app between 2005 and 2015, you probably worked on something like this at some point. In this monolith pattern, a series of controllers routes requests to the appropriate business logic, guided by data interfaces defined in the model layer. This is not a typical MVC pattern, since the view is an exposed API rather than templated HTML, but it should nonetheless be familiar to most engineers. If you are starting with this architecture today, I suggest immediately considering a transition to the next level below.
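The following is a minimal Python sketch of the pattern. It assumes an in-memory dict stands in for the database, and every name in it (`User`, `Order`, `get_order_summary`, `handle`) is hypothetical. The model layer defines the data interfaces, the business logic reads whatever it needs, and a controller routes API requests to that logic.

```python
import re
from dataclasses import dataclass

# Model layer: data interfaces shared by the whole application.
@dataclass
class User:
    id: int
    email: str

@dataclass
class Order:
    id: int
    user_id: int
    total_cents: int

# One process, one shared "database" (a dict standing in for real storage).
DB = {
    "users": {1: User(id=1, email="ada@example.com")},
    "orders": {10: Order(id=10, user_id=1, total_cents=2500)},
}

# Business logic: free to read any table, including other domains' data.
def get_order_summary(order_id: int) -> dict:
    order = DB["orders"][order_id]
    user = DB["users"][order.user_id]  # cross-domain access is just a lookup
    return {"order_id": order.id, "email": user.email, "total_cents": order.total_cents}

# Controller layer: maps an HTTP method and path to business logic.
ROUTES = [
    ("GET", re.compile(r"^/orders/(\d+)/summary$"), get_order_summary),
]

def handle(method: str, path: str) -> dict:
    for route_method, pattern, handler in ROUTES:
        match = pattern.match(path)
        if match and route_method == method:
            return handler(int(match.group(1)))
    return {"error": "not found"}
```

Note that `get_order_summary` reaches straight into the users data. That is convenient early on, and it is exactly the kind of coupling the following steps are meant to untangle.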

Monolith + Modules

For companies with a small engineering team, or without a dedicated Platform or SRE team, this is probably the best starting place. The design pattern is to use modules as if they were microservices running in a shared environment. Shared code can be abstracted out of the modules into a root-level library, or published as packages (to a package or library manager) that the modules install. The general rule is that modules cannot import code from each other and should communicate with each other using gRPC. gRPC is preferred over REST because it forms strict contracts between modules, behaving almost as if they were invoking each other's functions through their controllers (this is not exactly what's going on, but it's a reductive way of thinking about gRPC vs. REST). If you haven't worked with gRPC, a simpler approach is to have the modules call each other through the monolith's own API. This will hurt latency, but depending on what you are building and the skill of the team, it can be an acceptable trade-off even if it isn't considered an optimal design pattern.
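One way to keep the "modules cannot import code from each other" rule honest is to check it automatically, for example in CI. Below is a minimal sketch built on Python's standard `ast` module. It assumes each module is a top-level Python package whose name is a key in the `modules` dict, and that shared code lives in a separate package (here a hypothetical `shared`). The function name `find_cross_module_imports` is illustrative and not an existing tool.

```python
import ast

def find_cross_module_imports(modules: dict[str, str]) -> list[tuple[str, str]]:
    """Return (importing_module, imported_name) pairs that break the no-sibling-import rule.

    `modules` maps each module's package name to its source code. Relative
    imports (from . import x) are skipped, since they stay inside a module.
    """
    violations = []
    for name, source in modules.items():
        for node in ast.walk(ast.parse(source)):
            if isinstance(node, ast.Import):
                targets = [alias.name for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
                targets = [node.module]
            else:
                continue
            for target in targets:
                top_level = target.split(".")[0]
                # Importing a *different* module's package is a violation;
                # shared libraries and third-party packages are not in `modules`.
                if top_level in modules and top_level != name:
                    violations.append((name, target))
    return violations
```

For example, with `{"orders": "import shared.money\nfrom billing.internal import charge\n", "billing": "from shared.money import Cents\n"}` the function reports `[("orders", "billing.internal")]`. The `orders` module should instead reach `billing` through its gRPC (or REST) contract.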

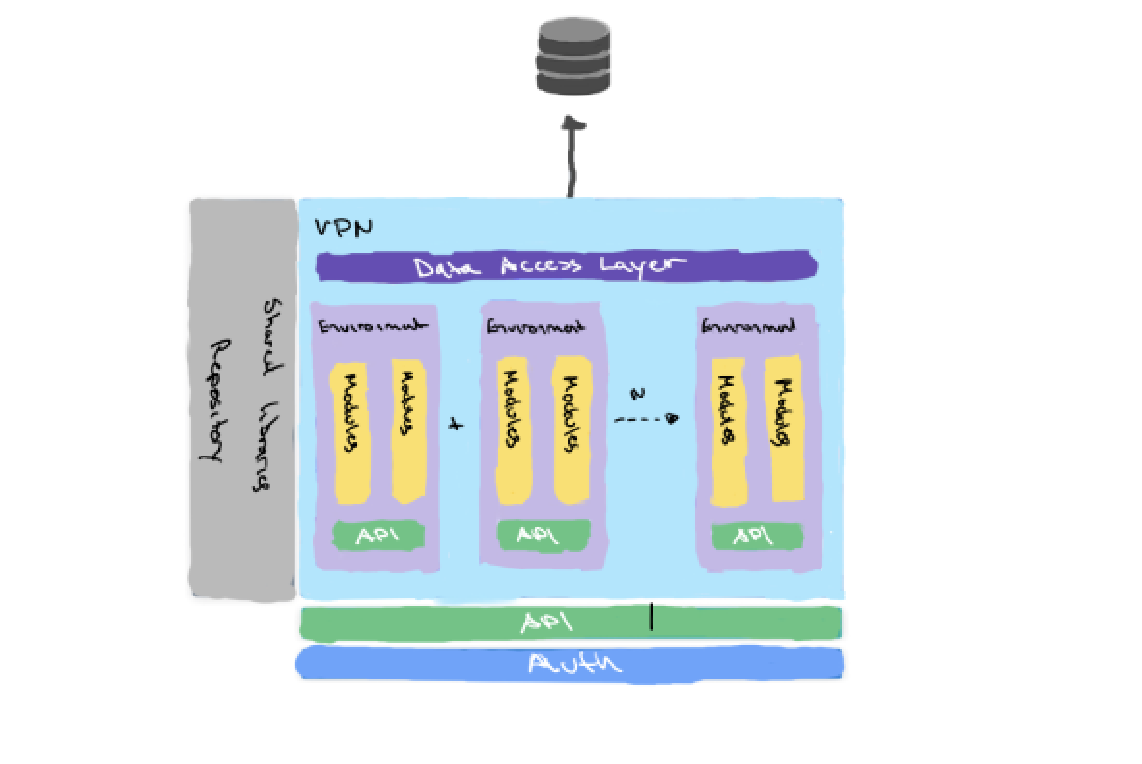
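The "strict contract" idea from the Monolith + Modules discussion can be approximated in plain Python. This sketch uses `typing.Protocol` and dataclasses as a stand-in for what a `.proto` file and generated gRPC stubs would provide. All names (`CustomerService`, `GetCustomerRequest`, `build_invoice_header`) are hypothetical. The point it illustrates is that the orders code depends only on the contract, never on the customers module's internals.

```python
from dataclasses import dataclass
from typing import Protocol

# Contract types: in a real setup these would be generated from a .proto file
# and published as a shared package that every module can install.
@dataclass(frozen=True)
class GetCustomerRequest:
    customer_id: int

@dataclass(frozen=True)
class GetCustomerResponse:
    customer_id: int
    name: str

class CustomerService(Protocol):
    def get_customer(self, request: GetCustomerRequest) -> GetCustomerResponse: ...

# Owned by the customers module; other modules never import this class.
class InProcessCustomerService:
    def __init__(self) -> None:
        self._names = {7: "Grace"}

    def get_customer(self, request: GetCustomerRequest) -> GetCustomerResponse:
        return GetCustomerResponse(request.customer_id, self._names[request.customer_id])

# Orders-module code: typed against the contract only, so the implementation
# behind it could later become a network client without this function changing.
def build_invoice_header(customers: CustomerService, customer_id: int) -> str:
    customer = customers.get_customer(GetCustomerRequest(customer_id))
    return f"Invoice for {customer.name} (#{customer.customer_id})"
```

Wiring `InProcessCustomerService()` into `build_invoice_header` would happen at application startup, so the orders code still never imports the customers module directly.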

Microservices + Modules

This is where we finally transition to microservices. If the previous step was followed properly, this transition should be largely mechanical, because each module can simply be lifted out of its current environment into a new, blank one. Whether you use gRPC or REST, inter-module communication should carry over with only minor adjustments. At this point we would need to move any shared libraries to a package manager, if that hasn't already been done, and introduce an API Gateway to provide a single point of communication with all the services.
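At its core, the API Gateway maps public request paths to the internal service that owns them. Below is a minimal sketch of prefix-based routing. It assumes each service owns a distinct URL prefix, the internal hostnames are hypothetical, and the full path is forwarded unchanged. Production gateways also handle concerns such as authentication, rate limiting, and retries, which are omitted here.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Upstream:
    prefix: str    # public path prefix exposed by the gateway
    base_url: str  # internal address of the owning service (hypothetical)

class ApiGateway:
    def __init__(self, upstreams: list[Upstream]) -> None:
        # Check longer prefixes first so /orders/refunds wins over /orders.
        self._upstreams = sorted(upstreams, key=lambda u: len(u.prefix), reverse=True)

    def resolve(self, path: str) -> Optional[str]:
        """Return the internal URL for a public path, or None if no service owns it."""
        for upstream in self._upstreams:
            # Match whole path segments only, so /ordersx does not match /orders.
            if path == upstream.prefix or path.startswith(upstream.prefix + "/"):
                return upstream.base_url + path
        return None
```

Because the gateway owns the public URL space, a module can move from the shared environment to its own deployment by changing only its `base_url` entry.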

This next step is a subtle transition, but an important one. I often recommend establishing a single data access layer (DAL) as a precursor to service-specific databases, because one sure way to slow your engineering team down is to restrict data access to isolated services. As your application grows, so does the information architecture of your data, and a DAL provides a uniform point of access to all your data sources. In this design we are only referencing the application's main database, but most companies have more than one database. Different databases are used to store different types of data, and a well-designed DAL running as a separate service means individual services don't need to implement their own communication modules for data. Additionally, it provides full visibility into schema designs, since every schema must be contained within the DAL, which acts as both schema manager and migration manager.
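A DAL can be pictured as a single object that owns every data source along with that source's migrations. The sketch below is an in-process approximation with several assumptions. `sqlite3` stands in for whatever databases you actually run, and the class and method names are hypothetical. In the design described above, the DAL would run as its own service behind an API rather than as a library.

```python
import sqlite3
from typing import Any

class DataAccessLayer:
    """Single entry point for every data source, and the one home of all schemas and migrations."""

    def __init__(self) -> None:
        self._sources: dict[str, sqlite3.Connection] = {}
        self._migrations: dict[str, list[str]] = {}

    def register_source(self, name: str, connection: sqlite3.Connection) -> None:
        self._sources[name] = connection
        self._migrations[name] = []

    def add_migration(self, source: str, ddl: str) -> None:
        # Every schema change is declared here, so all schemas are visible in one place.
        self._migrations[source].append(ddl)

    def migrate(self) -> None:
        """Apply pending migrations to each source in order; safe to run repeatedly."""
        for name, statements in self._migrations.items():
            conn = self._sources[name]
            conn.execute("CREATE TABLE IF NOT EXISTS _migrations (version INTEGER PRIMARY KEY)")
            applied = {row[0] for row in conn.execute("SELECT version FROM _migrations")}
            for version, ddl in enumerate(statements, start=1):
                if version not in applied:
                    conn.execute(ddl)
                    conn.execute("INSERT INTO _migrations (version) VALUES (?)", (version,))
            conn.commit()

    def query(self, source: str, sql: str, params: tuple = ()) -> list[tuple[Any, ...]]:
        return self._sources[source].execute(sql, params).fetchall()

    def execute(self, source: str, sql: str, params: tuple = ()) -> None:
        conn = self._sources[source]
        conn.execute(sql, params)
        conn.commit()
```

A service would call something like `dal.query("main", "SELECT email FROM users WHERE id = ?", (1,))` without knowing which engine or connection details sit behind the name `"main"`.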

Microservices + Modules + Multiple Databases

Above is the design for service-specific databases. One rule I like to implement (and which can be enforced through DAL permissions) is that any service can read data from any other service's database, but only the service that owns a database's domain can write to it. Reads don't alter data, so it's safe for services to read from each other, and this greatly improves development velocity, since reads are overwhelmingly the most common database operation. This way a service can read data without the service that manages that data's domain having to expose an API for it. Writes stay restricted to the owning service to keep them safe and secure, because that service, and the team that develops it, has the most vested interest in ensuring data quality.
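The read-any, write-own rule is small enough to express as a policy check that the DAL could run before forwarding a request. This is a minimal sketch that assumes each database has exactly one owning service. The database and service names, along with `OwnershipPolicy` and `PermissionDenied`, are hypothetical.

```python
from enum import Enum

class Operation(Enum):
    READ = "read"
    WRITE = "write"

class PermissionDenied(Exception):
    """Raised when a service attempts an operation the ownership rule forbids."""

class OwnershipPolicy:
    def __init__(self, owners: dict[str, str]) -> None:
        self._owners = dict(owners)  # database name -> owning service

    def is_allowed(self, service: str, database: str, operation: Operation) -> bool:
        if database not in self._owners:
            return False  # unknown databases are denied outright
        if operation is Operation.READ:
            return True  # reads never alter data, so any service may read any database
        return self._owners[database] == service  # writes: owning service only

    def authorize(self, service: str, database: str, operation: Operation) -> None:
        if not self.is_allowed(service, database, operation):
            raise PermissionDenied(f"{service} may not {operation.value} {database}")
```

With `OwnershipPolicy({"orders_db": "orders", "customers_db": "customers"})`, the orders service can read `customers_db` but gets `PermissionDenied` if it tries to write to it.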

Microservices + Modules + DAL + Multiple Databases

The above diagram is how we view the modern microservice architecture. At this stage you have microservices with a dedicated database per service (or, if using PostgreSQL, a single database server with an isolated schema per domain). I rarely recommend that any organization reach this level without the appropriate Platform and SRE operations in place. This level of microservice architecture is best reserved for companies with multiple product lines, where many services and databases never access each other, or for cases where data integrity and security are of the utmost importance (financial applications, for example).
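The PostgreSQL variant (one server, an isolated schema per domain) can be set up with a role and schema per service. The sketch below only generates the DDL. You would run it through `psql` or your migration tooling. It assumes a hypothetical naming convention of one login role per domain named `<domain>_svc`. It does not handle reserved words, passwords, or roles that already exist, and note that superusers bypass these restrictions.

```python
import re

def schema_per_domain_ddl(domains: list[str]) -> list[str]:
    """Generate PostgreSQL DDL giving each domain its own schema, owned by its own role."""
    statements: list[str] = []
    for domain in domains:
        # Only allow plain lowercase identifiers so names can be interpolated unquoted.
        if not re.fullmatch(r"[a-z_][a-z0-9_]*", domain):
            raise ValueError(f"invalid domain name: {domain!r}")
        role = f"{domain}_svc"  # hypothetical naming convention
        statements += [
            f"CREATE ROLE {role} LOGIN;",
            # The owning role gets full rights on its schema; other non-superuser
            # roles cannot use it unless explicitly granted USAGE.
            f"CREATE SCHEMA IF NOT EXISTS {domain} AUTHORIZATION {role};",
            # Explicit, although new schemas grant nothing to PUBLIC by default.
            f"REVOKE ALL ON SCHEMA {domain} FROM PUBLIC;",
        ]
    return statements
```

Keeping every domain on one server with schema-level isolation is cheaper to operate than separate database servers, which matters if Platform and SRE capacity is limited.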